Quick decisions make us stuck in a traffic jam

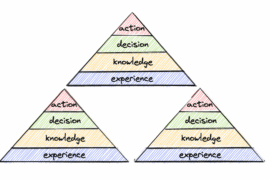

As engineers, we make plenty of decisions every day, some small and some big. Some of them may affect thousands of end customers who use our system. We also know that each decision comes with pressure to make it fast. But quick decisions may be followed by unwanted consequences.

Let’s look at an example from another field: city planning. When a road is heavily congested, what is the intuitive way to solve the issue? Many would probably say: widen the road, add more lanes. The theory of induced demand explains exactly why this is a bad idea. This video explains it nicely. Long story short: the more road capacity we build, the more people choose to drive even when they don’t have to, and congestion returns to the same level.

This kind of instinctive thinking almost led Amsterdam to convert its canals into six-lane highways under the Jokinen Plan!

Thinking too fast makes us overpay for a baseball bat

If we want to understand why this happens, we can turn to the principle of least effort. The basic idea is that our brains tend to choose the easiest possible option. Daniel Kahneman calls it fast thinking.

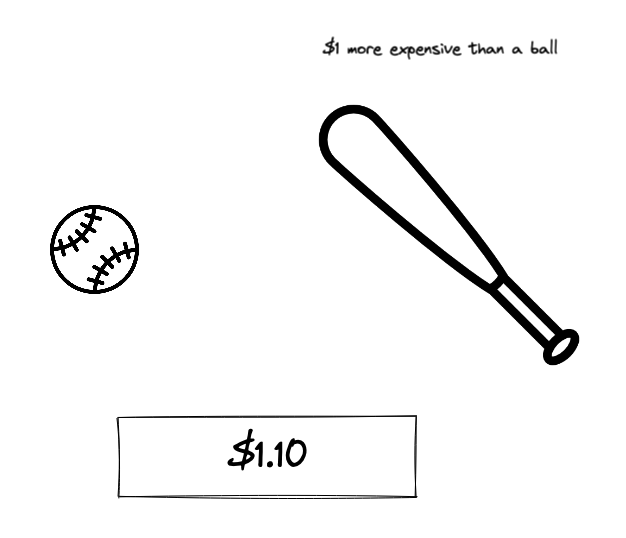

To show it in practice, let’s solve a puzzle. Make sure to answer quickly, say within 3 seconds; otherwise it won’t show how our brains work in a rush.

Ready? So here’s the question:

A baseball bat and a ball cost $1.10 together, and the bat costs $1.00 more than the ball. How much does the ball cost?

Many people answer that the ball costs $0.10 (unless they already know the puzzle). It doesn’t: if the ball cost $0.10, the bat would cost $1.10 and the total would be $1.20. The ball costs $0.05.
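A quick way to test any candidate answer is to plug it back into both conditions of the puzzle. The sketch below does that in Python; the constant and function names are made up for this illustration, and prices are kept in cents to sidestep floating-point rounding.

```python
# Prices in cents, so we can compare integers exactly.
TOTAL = 110       # bat + ball, from the puzzle
DIFFERENCE = 100  # bat - ball, from the puzzle


def satisfies_puzzle(ball: int) -> bool:
    """Return True if this ball price meets both conditions of the puzzle."""
    bat = ball + DIFFERENCE        # the bat costs $1.00 more than the ball
    return bat + ball == TOTAL     # together they must cost $1.10


# Check the intuitive answer (10 cents) and the correct one (5 cents).
for guess in (10, 5):
    bat = guess + DIFFERENCE
    print(f"ball={guess}c bat={bat}c total={bat + guess}c ok={satisfies_puzzle(guess)}")
```

The intuitive answer fails the check because it quietly drops one of the two conditions, which is exactly what fast thinking tends to do.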

Let x be the price of the ball:

$1.10 = x + (x + $1)

2x = $1.10 - $1

2x = $0.10

x = $0.05

Can we do something about it?

When we understand this, we may suddenly feel helpless. How the hell am I supposed to make good decisions if my brain is so stupid? Fear not! There’s hope. We don’t rely on fast thinking alone (if we did, we would be dead already or still living in caves); there is also slow, rational thinking. We just need to create an environment in which it can flourish. We can encourage slow thinking by creating rules and frameworks that push us to make the extra effort of switching from instinctive to rational mode (Richard H. Thaler and Cass R. Sunstein, 2008, refer to such decision-improving frameworks as “nudges”).

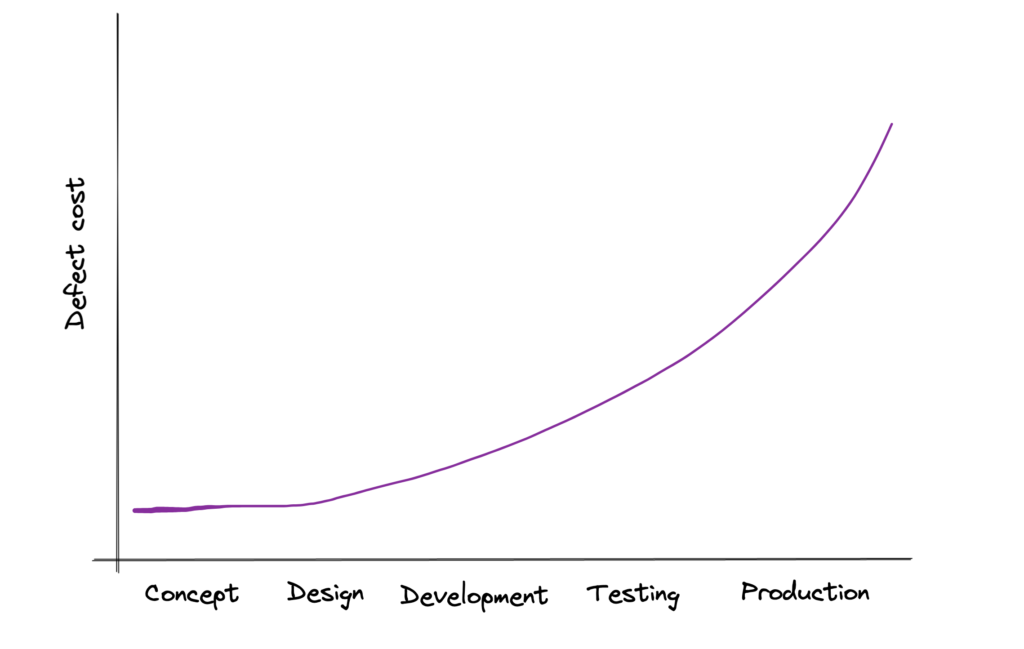

Multiple options

One such rule could be to always consider multiple options. Even if the first option seems good enough, it’s worth spending a little more time to make sure the idea really is good enough. The goal is to fail as cheaply as possible, so it is better to prove a solution bad in the design phase than after the rollout. This concept is also called “shifting left”: if we plot the cost of a defect over time, we want to detect it as far to the left as possible (Manshreck, Winters, Wright, 2020).

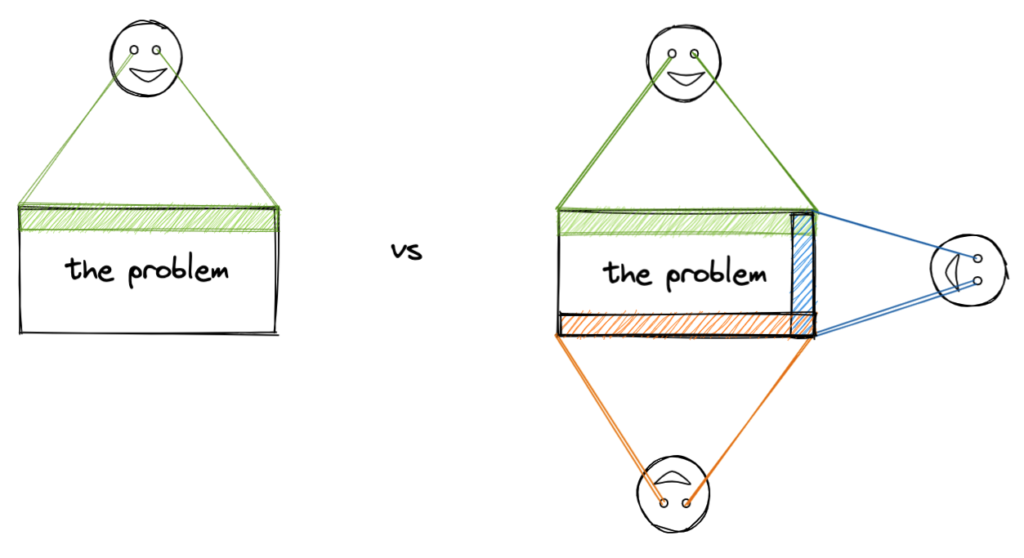

More eyes on the problem

Another thing we can do is always have at least two people look at the same problem. We may have good ideas and intuition, but we are only human after all, and we make mistakes. This additional set of eyes is our safety net when it comes to making better decisions. Therefore, we need to learn how to ask for help.

Of course, this can also be frustrating, and we may perceive others as obstacles that slow down our progress. This is something we need to learn to deal with. One way to do that is to stop from time to time, look back and celebrate the progress.

On the technical side, there are multiple ways to get more people to look at the problem:

- asking for feedback on chat tools e.g. Slack

- opening a draft PR or an RFC (request for comments) issue in GitHub

- creating an ADR (Architecture Decision Record).

Planning fallacy

Even if multiple people have looked at the solution, we may still fall for the planning fallacy: being too optimistic about the plan. This happens because it is much easier to imagine things we have experienced than things that have never happened to us. We also focus on the task ahead and tend to ignore similar tasks from the past that took longer and ran into many issues.

The planning fallacy in practice may look like this:

- [Manager]: How much time do you think we need to implement this payment gateway?

- [Engineer]: I think it should take 4 weeks. Maybe let’s add one more as a buffer.

- [Manager]: Oh, that sounds nice. Let’s put it on the roadmap. By the way, have you implemented such gateways in the past?

- [Engineer]: Yes, many times. I have experience.

- [Manager]: Good to hear that. How long did it usually take?

- [Engineer]: Hmm, … 3-4 months.
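The gap in this dialogue can be made explicit by comparing the new estimate with how long similar work actually took. The following Python sketch is only an illustration: the function name, the use of the median, and the rough 4-weeks-per-month conversion are assumptions, while the numbers come from the conversation above.

```python
from statistics import median

WEEKS_PER_MONTH = 4  # rough conversion, assumed for simplicity


def optimism_ratio(estimate_weeks: float, past_durations_weeks: list[float]) -> float:
    """Return the median past duration divided by the new estimate.

    A value well above 1 suggests the estimate ignores past experience.
    """
    return median(past_durations_weeks) / estimate_weeks


# From the dialogue: 4 weeks plus 1 week of buffer.
estimate = 4 + 1

# From the dialogue: similar projects took 3-4 months.
past = [3 * WEEKS_PER_MONTH, 4 * WEEKS_PER_MONTH]

ratio = optimism_ratio(estimate, past)
print(f"Past projects took about {ratio:.1f}x longer than the new estimate")
```

A ratio this far above 1 is a hint to stop and ask what makes the new project different from the earlier ones, rather than a precise forecast.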

Premortem analysis

Fortunately, there are ways to counteract this problem. We have to activate our rational brain and be creative about the challenges that may occur. This approach is called premortem analysis (by analogy with the postmortem, except that it takes place before the event). Right after agreeing on a solution, people sit down and imagine that the project, built on the chosen option, has failed X months or years from now. The main goal is to list as many reasons as possible for why it failed.

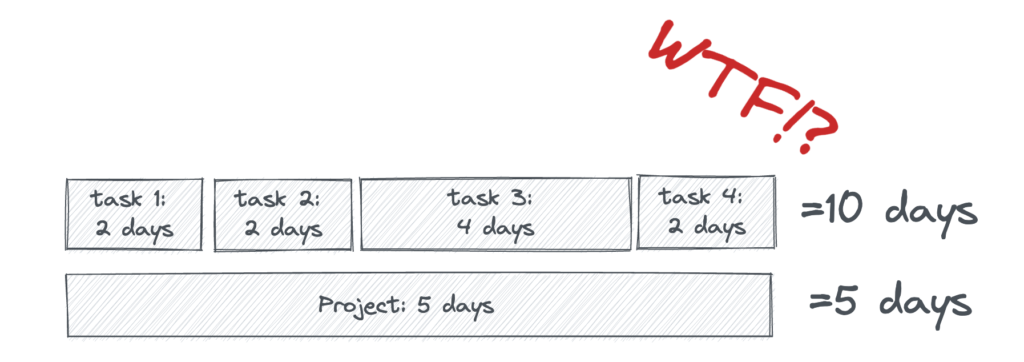

Segmentation effect

We may also try to split the problem into smaller chunks. When it comes to time estimation, the overall estimate for a project as a whole is usually lower than the sum of the estimates for its smaller subtasks (Forsyth, Burt, 2008).
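To see the effect on your own estimates, you can write down a whole-project figure first and then add up the estimates for the individual subtasks. The Python sketch below uses entirely hypothetical numbers and subtask names for a payment gateway project; only the comparison itself reflects the idea described above.

```python
# Hypothetical numbers, in days, for illustration only.
whole_project_estimate = 20

# Hypothetical breakdown of the same project into subtasks.
subtask_estimates = {
    "API client": 6,
    "webhook handling": 5,
    "error handling and retries": 6,
    "testing and rollout": 8,
}


def segmentation_gap(whole: int, subtasks: dict[str, int]) -> int:
    """Return how many days the summed subtask estimates exceed the whole-project estimate."""
    return sum(subtasks.values()) - whole


gap = segmentation_gap(whole_project_estimate, subtask_estimates)
print(f"Sum of subtasks: {sum(subtask_estimates.values())} days")
print(f"Gap versus whole-project estimate: {gap} days")
```

A positive gap suggests the single top-level number left something out, so the segmented total is probably the safer one to put on a roadmap.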

Summary

We, as engineers, have to make a lot of decisions every day. We have to be aware that the first thing that comes to mind may not be the best possible option. We are prone to biases and heuristics. Our thinking can be split into fast and slow thinking. If we don’t activate the slow, rational brain, we may end up making instinctive decisions like converting Amsterdam’s canals into six-lane highways. This happens because our brain follows the principle of least effort, which means that it automatically gives the easiest answer.

We can try to counteract these quick decisions by creating frameworks that force us to activate the rational part of our brains.

We may, for example, enforce a rule to always consider multiple options and to have multiple people review the final option (for example using ADRs).

But even when working together, we can still fall for the planning fallacy. Therefore, we may conduct a premortem analysis (which, unlike a postmortem, is done before the event) and imagine why the project might have failed because of our solution.

To avoid overoptimistic estimation we may also split the big project into smaller chunks.

Do you have any other ideas on how to defend against intuitive but suboptimal solutions? Please share your thoughts in the comments.

References

- Forsyth, D. K., & Burt, C. D. B. (2008). Allocating time to future tasks: The effect of task segmentation on planning fallacy bias. Memory & Cognition, 36(4), 791–798. https://doi.org/10.3758/MC.36.4.791

- Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

- Klein, G. (2007). Performing a project premortem. Harvard Business Review, 85(9), 18–19.

- Manshreck, T., Winters, T., & Wright, H. (2020). Software Engineering at Google (Chapter 1). O’Reilly Media.

- Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. Yale University Press.

Links

- Principle of least effort – Wikipedia

- Planning fallacy – Wikipedia

- Induced demand – Wikipedia

- How highways make traffic worse – YouTube – Vox

- ADR
